Learning based clustering of streamed process data for the efficient modelling and prediction of characteristics in milling processes based on the integration of domain knowledge – ClusterSim

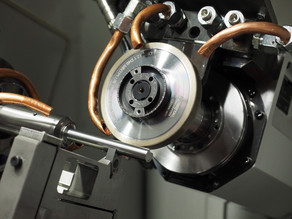

The high flexibility and diverse possibilities of milling call for a process design that is optimized for each specific application. To increase the productivity and efficiency of cutting processes, milling operations are therefore increasingly designed on the basis of simulations. Innovative developments such as kinematically highly complex process strategies expand the degrees of freedom and the parameter spaces available for optimizing manufacturing processes. As a result, the number of process characteristics increases significantly, which leads to new modeling challenges. Within the project initiative “ClusterSim”, an approach for representing milling processes is pursued that is not based on the detailed modeling of a multitude of physical quantities and their interactions, but is largely observation-based. Complex processes are to be divided into elementary increments using methods of cluster analysis. Machine learning (ML) models are then trained for each elementary increment to predict process characteristics, such as tool vibration and surface properties of the milled workpiece, from measured and simulated features, such as chip thickness and cutting speed. For this purpose, the relevant data must be acquired both experimentally and by simulation and must be synchronized in time. For the simulation, a geometric physically-based approach will be used. The ML models are not to be applied as a “black box”. Instead, both the applicability of the methods to be calibrated and the quality of the results are to be improved by integrating expert knowledge (domain knowledge), e.g. when determining the number of clusters required and when identifying the features that can be both measured and simulated and that contribute most to the prediction quality. Furthermore, an efficient milling process for training the models will be designed in combination with active learning. In this way, the minimum training set can be identified that produces a maximum variation of the feature values, so that the parameter spaces are covered as homogeneously as possible.
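The idea of clustering a process into elementary increments and training one model per increment can be illustrated with a minimal sketch. It is not the project's implementation: the k-means routine, the one-dimensional least-squares fit per cluster, the function names (`kmeans`, `fit_line`, `train`, `predict`) and the synthetic data are all assumptions chosen for brevity. The two features stand in for a chip thickness and a cutting speed that are assumed to be already scaled to comparable ranges, and the target stands in for a process characteristic such as a vibration amplitude.

```python
import random
from statistics import mean


def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centroids):
    """Index of the centroid closest to the given point."""
    return min(range(len(centroids)), key=lambda i: sq_dist(point, centroids[i]))


def kmeans(points, k, iterations=100):
    """Plain k-means with deterministic farthest-point initialisation."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        )
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        updated = [
            tuple(mean(axis) for axis in zip(*g)) if g else centroids[i]
            for i, g in enumerate(groups)
        ]
        if updated == centroids:  # assignments no longer change
            break
        centroids = updated
    return centroids


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var
    return my - slope * mx, slope


def train(features, targets, k):
    """Cluster the increments, then fit one local model per cluster."""
    centroids = kmeans(features, k)
    models = []
    for i in range(k):
        idx = [j for j, f in enumerate(features) if nearest(f, centroids) == i]
        # Simplification: each local model uses only the first feature.
        models.append(
            fit_line([features[j][0] for j in idx], [targets[j] for j in idx])
        )
    return centroids, models


def predict(feature, centroids, models):
    """Route a new increment to its cluster and apply that cluster's model."""
    intercept, slope = models[nearest(feature, centroids)]
    return intercept + slope * feature[0]


# Synthetic, noise-free stand-in data with two process regimes (hypothetical).
rng = random.Random(0)
features, targets = [], []
for _ in range(50):
    h, v = rng.uniform(0.1, 0.3), rng.uniform(0.1, 0.3)
    features.append((h, v))
    targets.append(1.0 + 2.0 * h)
for _ in range(50):
    h, v = rng.uniform(0.7, 0.9), rng.uniform(0.7, 0.9)
    features.append((h, v))
    targets.append(5.0 - 3.0 * h)

centroids, models = train(features, targets, k=2)
estimate = predict((0.2, 0.2), centroids, models)
```

Because the stand-in data are noise-free and each regime is exactly linear, `estimate` should land very close to 1.4. The number of clusters is passed in explicitly (`k=2`), which is the point where the text envisages domain knowledge entering the procedure. With real, noisy measurement data, a richer model per cluster and a guard against empty clusters would be needed.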

New and further developments of ML methods are frequently evaluated on freely available data sets, many of which have been generated synthetically. Such data are usually free of disturbance variables and differ from production-related measurement data. Measurement data acquired in production engineering tend to be noisy, are influenced by disturbance variables and do not contain only relevant information. Unfortunately, such data sets are not made freely available. Within the scope of the proposed research project, a concept for process and research data management is to be developed, and all training data used for modeling are to be made available to other researchers. For this purpose, the repository “Zenodo”, which is developed and operated by the European Organization for Nuclear Research (CERN), will be used.

![](/storages/isf-mb/_processed_/6/9/csm_AG_SimPro_Eyecatcher_1920p--_5f288a9cd4.jpg)